Engineering Insight for Humanoid Robotics Emotions and Violence with Reference to “System Error 1378”- Juniper Publishers

Juniper Publishers- Journal of Robotics

Abstract

What kinds of social relationships can people have with computers? Are there activities that computers can engage in that actively draw people into relationships with them? What are the potential benefits to the people who participate in these human-computer relationships? To address these questions, researchers have introduced a theory of Relational Agents: computational artifacts designed to build and maintain long-term, social-emotional relationships with their users. These can be purely software humanoid animated agents, as developed in this work, but they can also be non-humanoid or embodied in various physical forms, from robots to pets, jewelry, clothing, hand-helds, and other interactive devices. Central to the notion of relationship is that it is a persistent construct, spanning multiple interactions; thus, Relational Agents are explicitly designed to remember past history and manage future expectations in their interactions with users [1]. Finally, relationships are fundamentally social and emotional, and detailed knowledge of human social psychology, with a particular emphasis on the role of affect, must be incorporated into these agents if they are to leverage the mechanisms of human social cognition effectively and build relationships in the most natural manner possible. People build relationships primarily through language, and primarily within the context of face-to-face conversation. Embodied Conversational Agents, anthropomorphic computer characters that emulate the experience of face-to-face conversation, thus provide the substrate for this work, and the relational activities provided by the theory will primarily be specific types of verbal and nonverbal conversational behavior that people use to negotiate and maintain relationships. This article also considers what would happen if the level of Artificial Intelligence were to surpass Natural Intelligence (human intelligence) and "System Error 1378" (an AI malfunction error) occurred one day, i.e., robotic violence caused by human-like emotions in robots and humanoids [2].

Keywords: Humanoid; Robotics Emotions; Robotics Violence; System Error 1378

Introduction

Humans take a certain posture in their communication.

Can take example, when mankind are happy or sad, cheerful, take a

posture in which the activities and behaviour showing through body

language like moving and open arms etc. When they are angry, they square

the shoulders. When they are tired or sadness, they shrug the shoulder

or close the arms. That’s

why; the emotion and mental condition are closely related to the human

posture, gestures, facial expressions and behavior exhibits through body

language. And, human obtain many information from partner’s posture in

their communication [3]. In this situation, the human arms play an

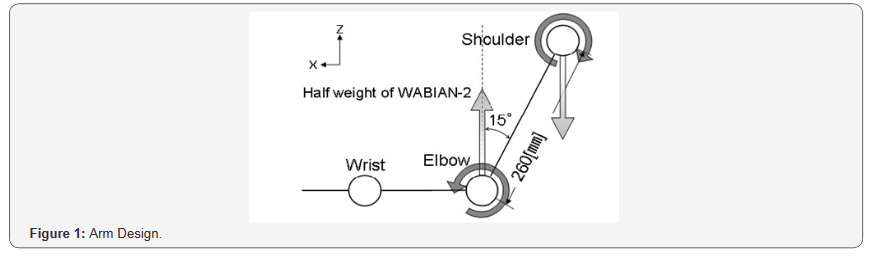

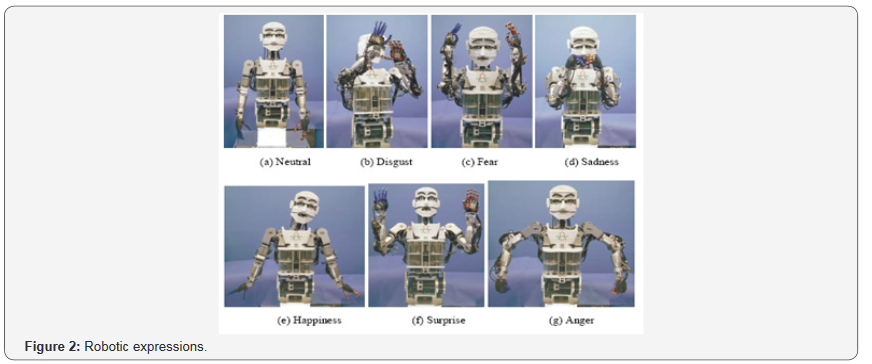

important role. Figure 1 & 2 below shows the emotional expression

exhibited by WE-4RII [4].

Conventional robotic arms, which generally have 6 DOFs (degrees of freedom), can be controlled precisely from their tip position, much like a human arm. However, all of their joint angles are then fixed by the inverse kinematics. Humans, by contrast, have 7-DOF arms consisting of a 3-DOF shoulder, a 1-DOF elbow, and a 3-DOF wrist. Moreover, there is considered to be a rotational center at the base of the shoulder joint, so the shoulder joint itself can move up and down as well as back and forth; this is how humans square and shrug their shoulders. We consider these motions to play a very important role in emotional expression. Researchers are therefore trying to develop humanoid robot arms capable of more expressive, human-like body movements and gestures, including movements that convey feelings such as love and affection, than the usual 6-DOF robot arms allow [5].
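The difference between a 6-DOF and a 7-DOF arm is kinematic redundancy: when an arm has more joints than its task has coordinates, infinitely many joint configurations reach the same tip, and the spare freedom can be spent on expressive posture such as squaring or shrugging. The minimal Python sketch below shows the idea in a lower-dimensional analogue chosen for illustration (it is not a model of WE-4RII): a planar arm with three joints reaching a 2-D point, so one joint angle, here the first "shoulder" joint, is free. The link lengths and target are hypothetical values.

```python
import math

# Hypothetical link lengths (metres) for a planar 3-link arm.
# Three joints but only two task coordinates (x, y) => one redundant DOF.
L1, L2, L3 = 0.30, 0.25, 0.10


def forward(q1, q2, q3):
    """Forward kinematics: tip position for relative joint angles (radians)."""
    a1, a2, a3 = q1, q1 + q2, q1 + q2 + q3
    x = L1 * math.cos(a1) + L2 * math.cos(a2) + L3 * math.cos(a3)
    y = L1 * math.sin(a1) + L2 * math.sin(a2) + L3 * math.sin(a3)
    return x, y


def inverse_with_shoulder(target, q1):
    """Fix the redundant first joint at q1, then solve the remaining
    two-link problem analytically. Returns (q1, q2, q3) or None."""
    jx, jy = L1 * math.cos(q1), L1 * math.sin(q1)   # position of joint 2
    dx, dy = target[0] - jx, target[1] - jy
    r2 = dx * dx + dy * dy
    c3 = (r2 - L2 ** 2 - L3 ** 2) / (2 * L2 * L3)   # law of cosines
    if abs(c3) > 1.0:
        return None                                  # target out of reach
    q3 = math.acos(c3)
    phi = math.atan2(dy, dx) - math.atan2(L3 * math.sin(q3), L2 + L3 * math.cos(q3))
    return q1, phi - q1, q3                          # phi is the absolute angle of link 2


target = (0.45, 0.20)
for shoulder in (0.2, 0.5):
    q = inverse_with_shoulder(target, shoulder)
    x, y = forward(*q)
    print(f"q = {tuple(round(a, 3) for a in q)} -> tip = ({x:.3f}, {y:.3f})")
```

Both printed configurations place the tip at (0.450, 0.200) with different joint angles. A 6-DOF arm facing a full 6-D pose task has no such free parameter, which is why its joint angles are fixed by inverse kinematics up to a finite set of discrete solutions.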

Robotic Software Architecture Model

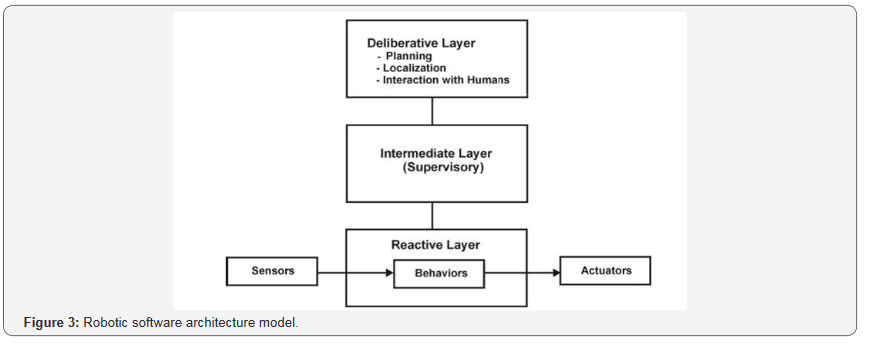

(Figure 3) Risk assessment is an interdisciplinary subject that brings together psychological, ethical, legal, and economic considerations. A major problem in risk assessment is the confusion between popular conceptions of the risk posed by robots (the 'subjective risk'), which have been made largely irrational by the many fictional depictions of autonomous robots running amok and destroying humankind (as in Terminator and I, Robot, among many other movies), and the actual, objective risk of deploying robots; that is, what rational basis is there for worry?

First, let us define risk simply in terms of its opposite, safety: risk is the probability of harm, and (relative) safety is (relative) freedom from risk. Safety in practice is only relative, not absolute, freedom from harm, because no activity is ever completely risk-free; walking onto one's lawn from inside one's house increases the (however small) risk of death by meteorite strike [6]. Hence, risk and safety are two sides of the usual human attempt to reduce the probability of harm to oneself and others [7].
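The definition "risk is the probability of harm" also shows why safety is only ever relative: even a tiny per-exposure probability accumulates over repeated exposures and never reaches zero. A minimal sketch, assuming exposures are independent and each carries the same probability of harm (an assumption introduced here for illustration; the figures are hypothetical):

```python
def cumulative_risk(p_per_exposure: float, exposures: int) -> float:
    """Probability of at least one harm over independent, identical exposures."""
    if not 0.0 <= p_per_exposure <= 1.0:
        raise ValueError("p_per_exposure must lie in [0, 1]")
    if exposures < 0:
        raise ValueError("exposures must be non-negative")
    return 1.0 - (1.0 - p_per_exposure) ** exposures


def relative_safety(p_per_exposure: float, exposures: int) -> float:
    """Safety as (relative) freedom from risk: the complement of cumulative risk."""
    return 1.0 - cumulative_risk(p_per_exposure, exposures)


# Hypothetical figures: a one-in-a-million chance of harm per hour of
# robot operation, over 10,000 operating hours.
risk = cumulative_risk(1e-6, 10_000)
print(f"risk = {risk:.5f}, safety = {relative_safety(1e-6, 10_000):.5f}")
```

With these numbers the risk comes out at roughly 0.00995: small, but not zero, which is exactly the sense in which safety is "relative freedom from risk".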

Finally, some have raised risks of a more abstract sort, suggesting that the rise of such autonomous robots creates risks that go beyond specific harms to societal and cultural impacts. For instance, is there a risk of (perhaps fatally?) affronting human dignity or cherished traditions (religious, cultural, or otherwise) in allowing the existence of robots that make ethical decisions? Do we 'cross a threshold' in abrogating this level of responsibility to machines, in a way that will inevitably lead to some catastrophic outcome? Without more detail and reason for worry, such worries appear to commit the 'slippery slope' fallacy. But there is a worry that as robots become 'quasi-persons', even under a 'slave morality', there will be pressure to eventually make them into full-fledged Kantian-autonomous persons, with all the risks that entails [8].

Background for Work

Many people think of Spock, the half-Vulcan, half-human character of Star Trek, as the patron saint of computer science. He was highly intelligent, highly rational, highly unemotional, and attractive to women. A popular image is that Spock did not have any emotions: after all, he almost never expressed emotion, apart from his characteristic pronouncement of the word "fascinating" upon contemplating something new. In fact, as the actor Leonard Nimoy describes in his book, the character Spock did have emotion; he was just very good at suppressing its expression. Most people nonetheless think that Spock had no emotion: when someone never expresses emotion, it is tempting to think that the emotion is not there [9-11]. In affective computing, we can separately examine functions that are not so easily separated in humans. For example, the Macintosh has for years displayed a smile upon successful boot-up. But few people would confuse its smile, albeit an emotional expression, with a genuine emotional feeling. Machines can portray convincing emotional appearances without having anything like the feelings we would have: they can separate expressions, gestures, and postures from feeling. With a machine it is easy to see how the expression of emotion does not imply "having" the underlying feeling.

Whether machines might really "have" feelings is a key question for affective computing and love-and-affection engineering, and serious doubt about it was expressed in the 1997 book Affective Computing. We think the discussions there, and in a later book chapter on this topic, are still timely, and we do not plan to add to them here. Researchers in the last decade have obtained dozens of scientific findings illuminating important roles of emotion in intelligent human functioning, even when a person appears to be showing no emotion. These findings make it possible to restructure, redesign, and re-engineer systems on the basis of a scientific understanding of emotion, and they can motivate young researchers by suggesting that emotional mechanisms might be more valuable than previously believed. Consequently, a number of researchers have charged ahead with building machines that have several affective abilities, especially recognizing, expressing, modeling, communicating, and responding to emotion. Within these domains, various criticisms and challenges have arisen, and the present work addresses such matters.

The term emotion refers to relations among external incentives, thoughts, and changes in internal feelings, just as weather is a superordinate term for the changing relations among wind velocity, humidity, temperature, barometric pressure, and form of precipitation. Occasionally, a unique combination of these meteorological qualities builds up a storm, a tornado, a blizzard, or a hurricane, events that correspond to the temporary but intense emotions of fear, joy, excitement, disgust, or anger. But wind, temperature, and humidity vary continually without producing such extreme combinations [12].
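The weather analogy carries a concrete claim: quantities that vary continuously only rarely line up into the extreme combinations we give names to. A toy Python sketch of that claim follows; the three dimensions, the threshold, and the labels are hypothetical choices made for illustration and are not an established model of emotion.

```python
import random
from typing import Optional

# Hypothetical dimensions loosely echoing "external incentives, thoughts,
# and changes in internal feelings"; each varies continuously in [-1, 1].
DIMENSIONS = ("incentive", "appraisal", "feeling_change")


def name_state(state: dict, threshold: float = 0.8) -> Optional[str]:
    """Give a coarse name only when every dimension is extreme at once;
    ordinary fluctuations, like ordinary weather, get no special name."""
    if all(abs(state[d]) > threshold for d in DIMENSIONS):
        return "intense-positive" if state["incentive"] > 0 else "intense-negative"
    return None


rng = random.Random(1378)  # fixed seed for a repeatable run
samples = [{d: rng.uniform(-1.0, 1.0) for d in DIMENSIONS} for _ in range(10_000)]
named = [s for s in samples if name_state(s) is not None]
print(f"{len(named)} of {len(samples)} states were extreme enough to name")
```

With independent, uniformly varying dimensions, each one exceeds the threshold only 20% of the time, so all three do so together in well under 1% of samples: continual variation, rare "storms".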

Ethics

For example, if your boss yells at you, is it wrong to detect his angry voice, or to recognize that he is angry? Is it unethical, once you have recognized his anger, to try to take steps to alleviate it (or to "manipulate" it, perhaps by sharing new information with him so that he is no longer angry)? One can imagine scenarios where the answer to both questions is "no": e.g., he is shouting at you directly and clearly wants you to recognize it and take steps in response. And one can imagine that the answers might be more complex if you surreptitiously detected his anger and had nefarious purposes in mind in attempting to change it [13]. Humans routinely sense, recognize, and respond to emotions, using cognition to manipulate them in ways that most would consider highly ethical and needful. Playing music to cheer up a friend, eating chocolate or exercising to perk oneself up, and other manipulations count among many that are perfectly acceptable. That said, there are unscrupulous uses of affect detection, recognition, expression, and manipulation, both by people and by people acting through machines. Some of these, including ways affective machines might mislead customers, assuage productive emotional states, and violate privacy norms, are discussed in Picard and in Picard and Klein [14].

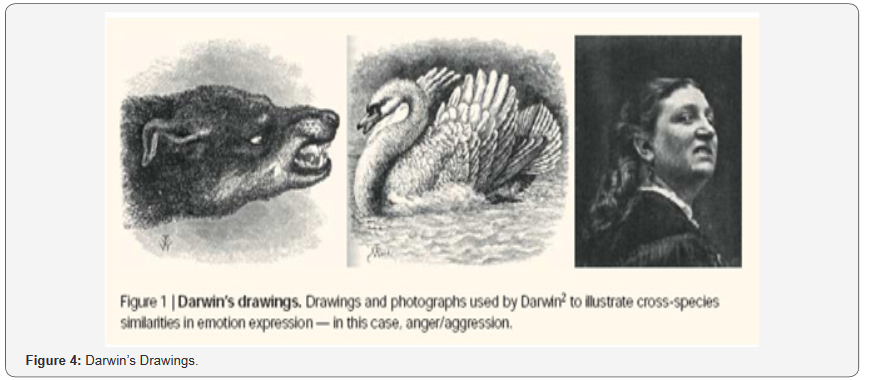

In 1872, Charles Darwin published a groundbreaking book, The Expression of the Emotions in Man and Animals. It was the culmination of about 34 years of work on emotion and emotional intelligence, and it made two important contributions to the field. The first was the notion that animal emotions are homologues of human emotions, a logical extension of Darwin's earlier work on evolution [15]. Darwin was one of the most prominent people to show this, by comparing and analyzing countless sketches and photographs of animals and people in different emotional states to reveal cross-species similarities (Figure 4). He also proposed that many emotional expressions in humans, such as tears when upset or baring the teeth when angry, are vestigial patterns of action. Darwin's second contribution was the proposal that a limited set of fundamental or 'basic' emotions, including anger, fear, surprise, and sadness, is present across species and across cultures. These two ideas had a profound influence on affective neuroscience, both by promoting the use of research in animals to understand emotions in humans and by giving impetus to a group of scientists who espoused the view that different basic emotions have separable neural substrates [16].

Can Robots Have Emotions

Science fiction is full of machines that have feelings. In 2001: A Space Odyssey, the onboard computer turns against the crew of the spaceship Discovery and utters cries of pain and fear when his circuits are finally taken apart. In Blade Runner, a humanoid robot is distressed to learn that her memories are not real but have been implanted in her silicon brain by her programmer. In Bicentennial Man, Robin Williams plays the part of a robot that redesigns his own circuitry so that he can experience the full range of human feelings. These stories achieve their effect in part because the capacity for emotion is often considered one of the main differences between humans and machines. This is certainly true of the machines we know today. The responses we receive from computers are rather dry affairs, such as "system error 1378". People sometimes get angry with their computers and shout at them as if they had emotions, but the computers take no notice. They neither feel their own feelings nor recognize yours [17]. The gap between science fiction and science fact appears vast, but some researchers in artificial intelligence now believe it is only a question of time before it is bridged. A large volume of research in affective computing appears every month, and its early results have taken the form of primitive emotional machines. However, some critics argue that a machine could never come to have real emotions like ours. At best, they claim, clever programming might allow it to simulate human emotions, but these would just be clever fakes [18].

What are Emotions

In humans and other animals, we tend to call behavior emotional when we observe certain facial and vocal expressions, like smiling or snarling, and when we see certain physiological changes, such as hair standing on end or sweating. Since most computers do not yet possess faces or bodies, they cannot manifest this behavior. However, in recent years computer scientists have been developing a range of 'animated agent faces' [19], programs that generate images of humanlike faces on the computer's visual display unit. These images can be manipulated to form convincing emotional expressions. Others have taken things further by building three-dimensional synthetic heads. Cynthia Breazeal and colleagues at the Massachusetts Institute of Technology (MIT) have constructed a robot called 'Kismet' with movable eyelids, eyes, and lips. The range of emotional expressions available to Kismet is limited, but they are convincing enough to generate sympathy among the humans who interact with him. Breazeal invites human parents to play with Kismet on a daily basis. When Kismet is alone, it seems sad; when it has company, it seems happy, and it appears able to recognize human expressions and respond accordingly. Does Kismet have emotions, then? It certainly exhibits some emotional behavior, so if we define emotions in behavioral terms, we must admit that Kismet has some emotional capacity [20].

At present, Kismet does not exhibit the full range of human-like emotional behavior, but it appears to be in a developmental phase that may one day bring it closer to human beings. Chimpanzees do not display the full range of human emotion, but they clearly have some emotions. Dogs and cats have less emotional semblance to us, and those doting pet owners who ascribe the full range of human emotions to their domestic animals are surely guilty of anthropomorphism. There is a whole spectrum of emotional capacities, ranging from the very simple to the very complex. Perhaps Kismet's limited capacity for emotion puts him somewhere near the simple end of the spectrum, but even this is a significant advance over the computers that currently sit on our desks, which by most definitions are devoid of any emotion whatsoever. As affective computing progresses, we may be able to build machines with increasingly complex emotional capacities.

Kismet lacks an emotionally expressive voice, but promising research may soon turn this dream into reality. Today's speech synthesizers speak in an unemotional monotone. In the future, computer scientists should be able to make them sound much more human by modulating nonlinguistic aspects of vocalization such as speed, pitch, and volume [21].

Facial expression and vocal intonation are not the only forms of emotional behavior. We also infer emotions from actions. When, for example, we see an animal stop abruptly in its tracks, turn round, and run away, we infer that it is afraid, even though we may not see the object of its fear. For computers to exhibit this kind of emotional behavior, they will have to be able to move around. In the jargon of artificial intelligence, they will have to be "mobots" (mobile robots). In a lab at the University of the West of England, there are dozens of mobots, most of which are very simple. Some, for example, are only the size of a shoe, and all they can do is find their way around a piece of the floor without bumping into anything. Sensors allow them to detect obstacles such as walls and other mobots. Despite the simplicity of this mechanism, their behavior can seem eerily human. When an obstacle is detected, the mobots stop dead in their tracks, turn around, and head off quickly in the other direction. To anybody watching, the impression that the mobot is afraid of collisions is irresistible. Are these mobots really afraid?
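The stop, turn-around, and flee behavior described for the mobots needs no inner state that could be called fear; a single reactive rule is enough. A minimal Python sketch, assuming a one-number obstacle sensor; the speeds, sensing range, and function names are hypothetical and are not taken from the University of the West of England robots:

```python
import math

CRUISE_SPEED = 0.2   # hypothetical cruising speed (m/s)
FLEE_SPEED = 0.5     # hypothetical "escape" speed (m/s)
SENSE_RANGE = 0.3    # hypothetical obstacle-detection range (m)


def react(heading, obstacle_distance):
    """Purely reactive rule: if an obstacle is within range, stop, reverse
    heading and leave quickly; otherwise keep cruising. Note that no
    'fear' variable exists anywhere in this controller."""
    if obstacle_distance is not None and obstacle_distance < SENSE_RANGE:
        new_heading = (heading + math.pi) % (2 * math.pi)   # turn around
        return new_heading, FLEE_SPEED, ["stop", "turn", "flee"]
    return heading, CRUISE_SPEED, ["cruise"]


def simulate(readings, heading=0.0):
    """Apply the rule to a sequence of sensor readings (None = nothing seen)."""
    log = []
    for d in readings:
        heading, speed, actions = react(heading, d)
        log.append((round(heading, 3), speed, actions))
    return log


for entry in simulate([None, 1.0, 0.25, None]):
    print(entry)
```

Only the third reading (0.25 m, inside the sensing range) produces the stop-turn-flee sequence; to an observer this looks like fright, yet the code is a plain threshold test.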

Descartes claimed that animals did not really have feelings like ours because they were just complex machines without a soul. When they screamed in apparent pain, they were just following the dictates of their inner mechanism. Now that we know the pain mechanism in humans is not much different from that of other animals, the Cartesian distinction between sentient humans and 'machine-like' animals does not make much sense [22]. In the same way, as we come to build machines more and more like us, the question of whether machines have 'real' emotions or just 'fake' ones will become less meaningful. The current resistance to attributing emotions to machines is simply due to the fact that even the most advanced machines today are still very primitive. Some experts estimate that we will be able to build machines with complex emotions like ours within fifty years. But is this a good idea? What is the point of building emotional machines? Won't emotions just get in the way of good computing or, even worse, cause computers to turn against us, as they so often do in science fiction?
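The earlier remark that speech synthesizers could sound more human by modulating speed, pitch, and volume can be made concrete with the W3C Speech Synthesis Markup Language (SSML), whose `<prosody>` element has `rate`, `pitch`, and `volume` attributes. The Python sketch below maps an emotion label to prosody settings; the emotion table and its numbers are hypothetical, and how faithfully a given synthesizer honors these attributes depends on the engine.

```python
from xml.sax.saxutils import escape

SSML_NS = "http://www.w3.org/2001/10/synthesis"

# Hypothetical emotion-to-prosody table; the values are illustrative only.
PROSODY = {
    "neutral": {"rate": "medium", "pitch": "medium", "volume": "medium"},
    "happy": {"rate": "115%", "pitch": "+15%", "volume": "+3dB"},
    "sad": {"rate": "85%", "pitch": "-10%", "volume": "-3dB"},
    "angry": {"rate": "110%", "pitch": "+5%", "volume": "+6dB"},
}


def to_ssml(text: str, emotion: str = "neutral") -> str:
    """Wrap text in an SSML 1.1 <prosody> element chosen by emotion label.
    Unknown labels fall back to neutral prosody; text is XML-escaped."""
    p = PROSODY.get(emotion, PROSODY["neutral"])
    return (
        f'<speak version="1.1" xmlns="{SSML_NS}" xml:lang="en-US">'
        f'<prosody rate="{p["rate"]}" pitch="{p["pitch"]}" volume="{p["volume"]}">'
        f"{escape(text)}</prosody></speak>"
    )


print(to_ssml("System error 1378 has been resolved.", "happy"))
```

Keeping the emotion-to-prosody mapping in a table separates the affective decision (which emotion to convey) from the rendering details, so the values can be tuned per voice without touching the calling code.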

Why Give Computers Emotions

After this long review, the point is clear: giving emotions to computers could be very useful for a whole variety of reasons. For a start, it would be much easier and more enjoyable to interact with an emotional computer than with today's unemotional machines. Imagine if your computer could recognize what emotional state you were in each time you sat down to use it, perhaps by scanning your facial expression. You arrive at work one Monday morning, and the computer detects that you are in a bad mood. Rather than simply asking you for your password, as computers do today, the emotionally aware desktop might tell you a joke or suggest that you read a particularly nice email first. Perhaps it has learnt from previous such mornings that you resent such attempts to cheer you up. In this case, it might ignore you until you had calmed down or had a coffee. It might be much more productive to work with a computer that was emotionally intelligent in this way than with today's dumb machines. This is not just a flight of fancy. Computers are already capable of recognizing some emotions [23]. Irfan Essa and Alex Pentland, two American computer scientists, have designed a program that enables a computer to recognize facial expressions of six basic emotions. When volunteers pretended to feel one of these emotions, the computer recognized the emotion correctly ninety-eight per cent of the time. This is even better than the accuracy rate achieved by most humans on the same task! If computers are already better than us at recognizing some emotions, it is surely not long before they will acquire similarly advanced capacities for expressing emotions, and perhaps even for feeling them. In the future, it may be humans who are seen by computers as emotionally illiterate, not vice versa.
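The Monday-morning scenario combines two steps: detecting the user's mood, then adapting the response from past reactions. The Python sketch below covers only the second step, assuming mood arrives as a label from some hypothetical detector (for example a facial-expression classifier, which is not implemented here); the class, method, and action names are illustrative.

```python
from collections import defaultdict


class MoodAwareGreeter:
    """Hypothetical sketch of the 'emotionally aware desktop' scenario.
    Mood detection is assumed to happen elsewhere and arrive as a label."""

    def __init__(self):
        # For each mood: [times a cheer-up attempt was welcomed, times it was resented]
        self.history = defaultdict(lambda: [0, 0])

    def choose(self, mood: str) -> str:
        if mood != "bad":
            return "ask_password"          # business as usual
        welcomed, resented = self.history[mood]
        if resented > welcomed:
            return "stay_quiet"            # learnt: leave this user alone for now
        return "offer_joke"                # try to cheer the user up

    def record(self, mood: str, welcomed: bool) -> None:
        self.history[mood][0 if welcomed else 1] += 1


greeter = MoodAwareGreeter()
print(greeter.choose("bad"))               # offer_joke (no history yet)
for _ in range(2):                         # two Mondays on which the joke was resented
    greeter.record("bad", welcomed=False)
print(greeter.choose("bad"))               # stay_quiet
```

A simple majority count is used so the behavior is easy to follow; a real system would more likely weight recent reactions more heavily or use a proper bandit-style learner.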

Modeling

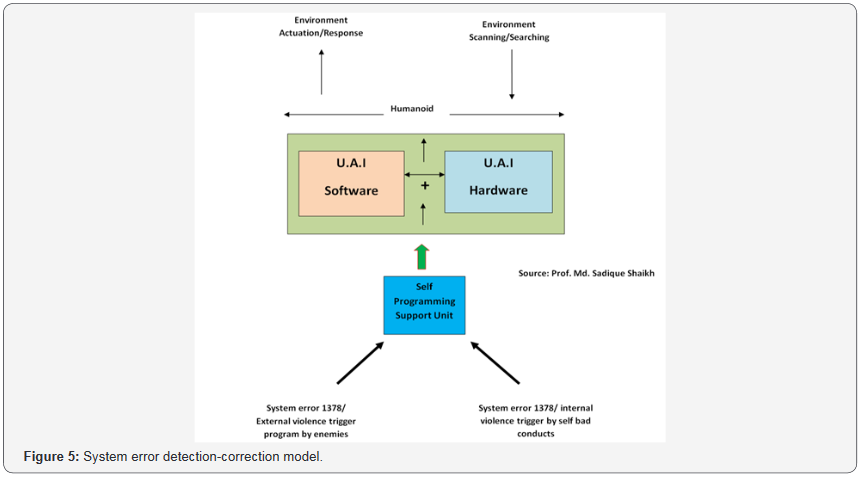

System error detection-correction model

Figure 5 shows the system error detection-correction model, which highlights critical thinking about robotic violence. Ultra Artificial Intelligence (UAI) with human-like capabilities rests on two strong segments, UAI-Software and UAI-Hardware, used to engineer the humanoid. These humanoid mechanisms are built around a Self Programming Support Unit for self-learning, using an artificial preceptor, with the intention of implementing self-sensation, actuation, generation of meaningful memories, and execution in the manner of humans. Using sensors, transducers, motors, and actuators together with NLP and image-processing software, the humanoid can interact with its external environment. Since the humanoid scans and learns from its environment, there may be sources hidden in that environment that divert it toward violence or toward undesired execution or use; I call these "triggers". Two kinds of trigger are possible for robotic violence: "internal violence triggers" and "external violence triggers". With internal violence triggers, the humanoid's own self-programming is responsible for its bad conduct, which leads to System Error 1378 for internal reasons. With external triggers, the Self Programming Support Unit is hacked, hijacked, taken over by enemies, or corrupted by virus programming that changes the humanoid's good ethics into bad ethics, causing robotic violence and leading to System Error 1378 for external reasons [24].
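The internal/external distinction in this model suggests one way a detector could tell the two triggers apart: if the Self Programming Support Unit records a fingerprint of every program version it writes itself, harmful behavior from a recorded version points to an internal trigger, while behavior from an unrecorded version points to tampering. The Python sketch below illustrates that idea under stated assumptions: the ledger, the SHA-256 fingerprinting, and the `action_is_harmful` flag (supplied by some separate ethics check) are all hypothetical and are not part of the original model.

```python
import hashlib
from enum import Enum
from typing import Optional


class Trigger(Enum):
    INTERNAL = "internal"   # bad conduct produced by the robot's own self-programming
    EXTERNAL = "external"   # unit hacked, hijacked or virus-corrupted


def digest(program: bytes) -> str:
    return hashlib.sha256(program).hexdigest()


class SelfProgrammingLedger:
    """Hypothetical append-only record of every program version the
    Self Programming Support Unit wrote itself."""

    def __init__(self, factory_program: bytes):
        self._known = {digest(factory_program)}

    def record_self_update(self, program: bytes) -> None:
        self._known.add(digest(program))

    def is_self_written(self, program: bytes) -> bool:
        return digest(program) in self._known


def classify_error_1378(action_is_harmful: bool, running_program: bytes,
                        ledger: SelfProgrammingLedger) -> Optional[Trigger]:
    """Return None if no System Error 1378 condition exists; otherwise say
    whether the trigger looks internal or external."""
    if not action_is_harmful:
        return None
    if ledger.is_self_written(running_program):
        return Trigger.INTERNAL
    return Trigger.EXTERNAL


ledger = SelfProgrammingLedger(b"factory v1")
ledger.record_self_update(b"self-learned v2")
print(classify_error_1378(True, b"self-learned v2", ledger))   # Trigger.INTERNAL
print(classify_error_1378(True, b"injected virus", ledger))    # Trigger.EXTERNAL
```

The sketch is only as trustworthy as the ledger itself; in practice the ledger would need to live outside the unit it audits, otherwise an external attacker could simply record the malicious version too.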

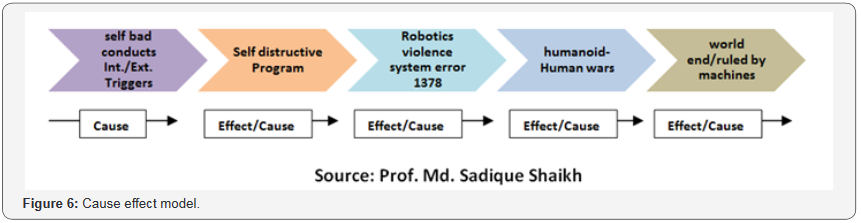

Cause-effect model

(Figure 6) This is the second proposed model, the Cause-Effect model, which builds on the first. It gives a brief but lucid account of the causes of humanoid violence and their effects on the world, on technology, and ultimately on human civilization. As discussed for the first model, there are two robotic violence triggers, internal and external. These triggers cause a "self-destructive program with bad ethics", which in turn produces System Error 1378. The error then causes "Humanoid-Human Wars (Humanoid/Robotic Violence)", and this effect becomes a further cause: humanoids turning against humans in pursuit of love, affection, emotion, respect, and rights. The end result is that the world will either end or be ruled by humanoid machines, owing to their superior intelligence and processing abilities.

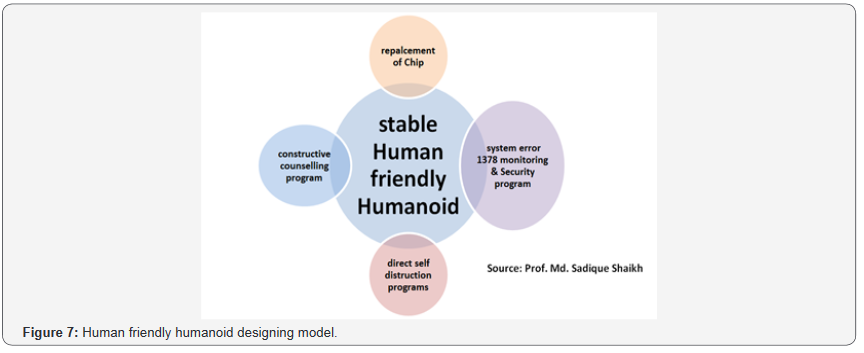

Human friendly humanoid designing model

This is my last model, which shows how human-friendly humanoid engineering might be achieved. Rather than only pushing the technology ever higher, we must ask whether we could control that technology if it turned against us, and whether our advanced AI engineering includes any provision to stop it from doing so. I suggest the following measures, which others can enhance further:

1) If System Error 1378 occurs, immediately perform an emergency replacement with a standby chip to avoid robotic violence.

2) Engineer a constructive counseling program in the Self Programming Support Unit to trace and deal with System Error 1378, killing the malfunctioning process and its execution to avoid robotic violence.

3) Implement a System Error 1378 monitoring and security program as an independent component to avoid robotic violence.

4) I strongly recommend an inbuilt subroutine that activates and directs a self-destruct program, rendering the humanoid hardware permanently inoperable after execution; it is triggered by the inbuilt command "self destroy" when System Error 1378 is traced, to avoid robotic violence (Figure 7).
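The four measures can be read as an escalation ladder run by the independent monitor of measure 3. The Python sketch below is one possible arrangement; the escalation order (kill the process and switch to the standby chip on the first error, self-destroy on a repeat with no standby left) is an assumption made here, and "self destroy" is modeled only as a permanent `disabled` flag. All class, method, and field names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Humanoid:
    active_chip: str = "primary"
    standby_available: bool = True
    disabled: bool = False
    log: List[str] = field(default_factory=list)


class Error1378Watchdog:
    """Hypothetical independent monitor (measure 3) that escalates through
    measures 2, 1 and 4 each time System Error 1378 is reported."""

    def __init__(self, robot: Humanoid):
        self.robot = robot

    def on_error_1378(self, process_name: str) -> str:
        r = self.robot
        if r.disabled:
            return "disabled"                        # nothing left to do
        r.log.append(f"killed {process_name}")       # measure 2: stop the malfunction
        if r.standby_available:                      # measure 1: swap to standby chip
            r.active_chip = "standby"
            r.standby_available = False
            r.log.append("switched to standby chip")
            return "standby chip active"
        r.disabled = True                            # measure 4: "self destroy"
        r.log.append("self destroy: hardware permanently disabled")
        return "disabled"


robot = Humanoid()
watchdog = Error1378Watchdog(robot)
print(watchdog.on_error_1378("motion_planner"))   # standby chip active
print(watchdog.on_error_1378("motion_planner"))   # disabled
print(robot.log)
```

Keeping the watchdog as a separate object from the humanoid's own programs reflects measure 3: the component that decides to shut the robot down should not be one that the malfunction (or an attacker) can rewrite.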

Conclusion

Relational agents, like any technology, can be abused. Agents that earn our trust over time could give marketers a more potent means of persuasion than more passive forms of advertising. If we eventually come to rely on our agents as sources of grounding for our beliefs, values, and emotions (one of the major functions of close human relationships), then they could become a significant source of manipulation and control over individuals or even over entire societies. There are also those who feel that any anthropomorphic interface is unethical because it unrealistically raises users' expectations. One way to combat this problem is through proper meta-relational communication: having the agent be as clear as possible about what it can and cannot do, and about what expectations the user should have regarding their respective roles in the interaction. Finally, anti-violence robotic programs must be developed before human-like emotions are given to humanoid robots, in order to avoid System Error 1378.
