Wireless Glove for Hand Gesture Acknowledgment: Sign Language to Discourse Change Framework in Territorial Dialect- Juniper Publishers

Juniper Publishers- Journal of Robotics

Abstract

Generally, deaf-mute people use sign language for communication, but they find it difficult to communicate in a society where most people do not understand sign language. As a result, communication between a deaf-mute person and a hearing person has always been challenging. The idea proposed in this paper is a digital wireless glove that converts sign language to text and speech output. The glove is embedded with flex sensors and a 9-degree-of-freedom (9DOF) inertial measurement unit (IMU) to recognize gestures: the flex sensors track the bending of the fingers and the IMU tracks the orientation and motion of the hand in three-dimensional space, so that a gesture is sensed as the bend of the fingers and the tilt of the hand. The system was tested for its practicality in converting sign language, and it produces continuous speech output in a regional language while also displaying the text on a GLCD module. The displayed text is in English, while the voice output of the glove is in a regional language (here Kannada). The glove therefore acts both as a communicator that helps users express their needs and as an interpreter that gives greater flexibility in communication. Although the glove is designed for sign-language-to-speech conversion, it is a multipurpose glove with applications in gaming, robotics and the medical field.

Keywords: Sign Language; Flex Sensors; State Estimation Method; 3D Space; Gesture Recognition

Abbreviations:

ANN: Artificial Neural Networks; SAD: Sum of Absolute Difference; IMU: Inertial Measurement Unit; ADC: Analog to Digital Converter; HCI: Human Computer Interface

Introduction

Millions of people in the world are deaf and mute. How often do we see them communicating with the hearing world? Communication between deaf people and the general public is a more serious problem than communication between visually impaired people and the general public, and because communication is a fundamental part of life, this leaves deaf people very little room to interact. Blind people can talk freely using ordinary spoken language, whereas deaf-mute people have their own manual-visual language, popularly known as sign language [1]. Glove-based systems, the most popular devices for acquiring hand movements, were first developed about 30 years ago and continue to engage a growing number of researchers. Sign language is the non-verbal form of communication used by deaf and mute people; it uses gestures instead of sound to convey a speaker's thoughts fluently. A gesture in a sign language is a particular movement of the hands with a specific shape made by them [2]. The conventional approach to gesture recognition is a camera-based system that tracks the hand gestures, but such a system is less user-friendly because it is difficult to carry around.

The main aim of this paper is to present a novel glove-based system that efficiently translates sign language gestures into audible speech as well as text, and that is also portable [3]. Many languages are spoken around the world, and even sign language varies from region to region, so this system aims to give the voice output in regional languages (here Kannada). A few past attempts at sign language recognition used cameras, leaf switches and copper plates to recognize gestures, but they suffered from limitations in response time and recognition rate that kept the gloves from being portable. There have mainly been two well-known approaches: image processing techniques, and processor- and sensor-based data gloves [4]. These are also known as vision-based and sensor-based techniques. Our system is one such sensor-based effort to overcome this communication barrier: it senses hand movement through flex sensors and an inertial measurement unit and then transmits the data wirelessly to a Raspberry Pi. The Raspberry Pi is the main processor; it accepts the digital data as input, processes it according to instructions stored in its memory, and outputs the result as text on a GLCD display and as voice output.

Background Work

People who are deaf or mute are isolated in the modern workplace as well as in everyday life, which leads them to live within their own separate communities. There have been improvements, for example, in hearing amplifiers and cochlear implants for deaf people and in artificial voice boxes for mute people with vocal cord damage. However, these solutions do not come without drawbacks and costs [5].

Cochlear implants have even caused a tremendous debate in the deaf community, and many refuse even to consider such solutions. We therefore believe society still needs an effective solution to remove the communication barrier between deaf and mute people and non-signing individuals. Our proposed solution and goal is to design a Human Computer Interface (HCI) device that translates sign language into text and speech in a regional language, giving any deaf or mute person the ability to communicate easily with anyone [6]. The idea is to design a device worn on the hand, with sensors capable of measuring hand signs, that transmits the data to a processing unit which performs the sign language translation. The final product should be able to interpret sign language efficiently and give the targeted output. We hope to improve the quality of life of deaf and mute people with this device.

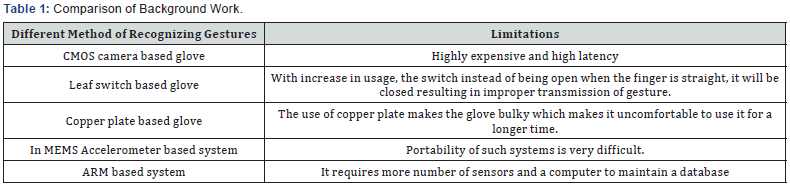

Comparison of Background Related Work

(Table 1)

Problem Definition

With a world population of around 7.6 billion today, communication is a powerful means of understanding each other. Millions of individuals are deaf and mute. People with speech impairments cannot articulate words intelligibly; to communicate with the general population they rely on a specific skill, such as holding a static hand orientation to form a sign, in the manual-visual language popularly known as sign language. They find it hard to communicate in a society where most people do not understand sign language. Hence they have little room to communicate and cannot interact across a wider sphere [7].

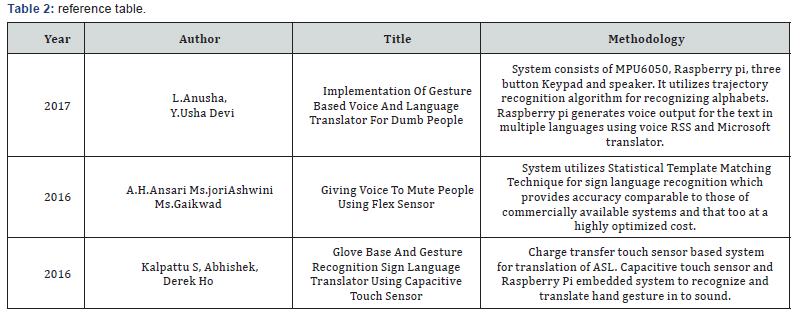

Literature Survey

The system investigates the use of a glove to provide sign language translation in a way that improves on the techniques of previous designs. A few university research groups have built prototype devices as proposed solutions to this problem; these devices focus on reading and analyzing hand movement. However, they lack the ability to capture the full range of motion that sign language requires, including wrist rotation and complex arm movements (Table 2).

Proposed System

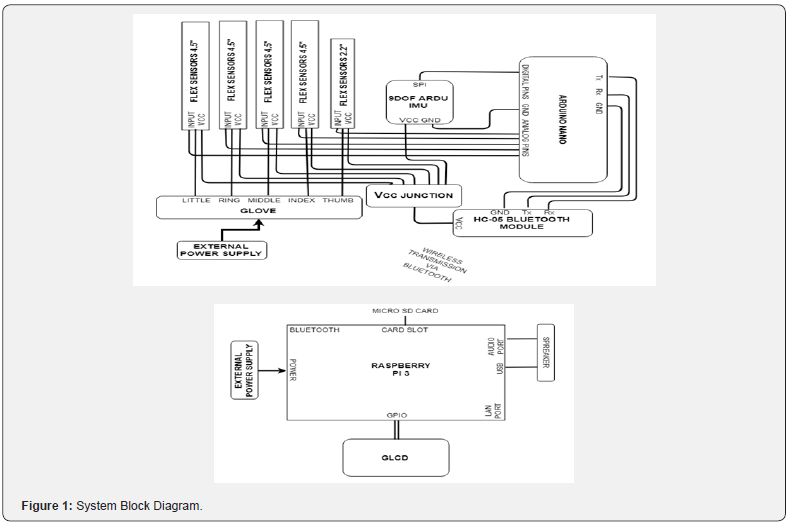

(Figure 1)

Block Diagram Explanation

The sensor based system is designed using four 4.5 inch

and two 2.2-inch flex sensors which are used to measure the

degree to which the fingers are bent. These are sensed in

terms of resistance values which is maximum for minimum

bend radius. The flex sensor incorporates a potential divider

network which is employed to line the output voltage across 2

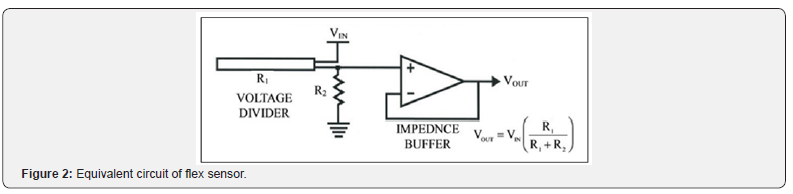

resistors connected as shown in Figure 2.

The output voltage is determined using the following equation:

$$V_{out} = V_{in}\,\frac{R_2}{R_1 + R_2}$$

where R1 is the flex sensor resistance and R2 is the fixed input resistance.

The external resistor and the flex sensor form a potential divider that divides the input voltage by a ratio determined by the variable and fixed resistors. For each gesture, the changing resistance of the flex sensor changes the current through the divider, and hence its output voltage, which is read as analog data.
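As a worked illustration of V_out = V_in × R2 / (R1 + R2), the sketch below plugs in the component values the prototype reports later (a 2.5 kΩ fixed resistor, a 3.3 V supply, 7 to 15 kΩ for the 4.5-inch sensors and 20 to 40 kΩ for the 2.2-inch sensor). It assumes the flex sensor is the upper resistor R1, as in the equation, and an ideal 10-bit ADC whose reference equals the 3.3 V divider supply; this reference is an assumption, since an Arduino Nano's default analog reference is its 5 V supply, which would scale the counts down.

```python
"""Voltage-divider output and ideal ADC count for the glove's flex sensors (sketch)."""

VCC = 3.3          # divider supply in volts (3.3 V pin of the Arduino Nano)
R_FIXED = 2_500.0  # fixed divider resistor R2 in ohms
ADC_MAX = 1023     # full-scale count of a 10-bit ADC


def divider_vout(r_flex: float, vin: float = VCC, r_fixed: float = R_FIXED) -> float:
    """V_out = V_in * R2 / (R1 + R2), with the flex sensor as R1."""
    return vin * r_fixed / (r_flex + r_fixed)


def adc_count(vout: float, vref: float = VCC, full_scale: int = ADC_MAX) -> int:
    """Ideal conversion of a voltage to an ADC count, rounded to the nearest integer.

    Assumes the ADC reference equals vref (3.3 V here), which is a hypothetical setup.
    """
    return round(vout / vref * full_scale)


if __name__ == "__main__":
    # Resistance ranges quoted in the text for the two sensor lengths.
    ranges = {"4.5-inch": (7_000, 15_000), "2.2-inch": (20_000, 40_000)}
    for label, (r_low, r_high) in ranges.items():
        for r in (r_low, r_high):
            v = divider_vout(r)
            print(f"{label} at {r / 1000:.0f} kOhm: {v:.3f} V -> count {adc_count(v)}")
```

With these values a 4.5-inch sensor spans roughly counts 269 (straight) to 146 (fully bent): the count falls as the finger bends, because the sensor's rising resistance sits above the output node.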

One terminal of each flex sensor is connected to 3.3 V, and the other end of the divider is connected to ground to close the circuit. A 9-degree-of-freedom Ardu Inertial Measurement Unit (IMU), which provides the accelerometer and gyroscope readings, is placed on top of the hand to determine its position. The IMU collects the corresponding 3D coordinates as input data [8]. The impedance values from the flex sensors and the IMU coordinates for each gesture are recorded to populate the database, which contains the values assigned to different finger movements. When the data from both the flex sensors and the IMU are fed to the Arduino Nano, it computes and compares them with the predefined dataset to detect the precise gesture, and the result is transmitted wirelessly via a Bluetooth module to the central processor, i.e. the Raspberry Pi. The Raspberry Pi 3 is programmed to display the text output on the GLCD. The graphic LCD is interfaced with the Raspberry Pi 3 using a 20-bit universal serial bus to avoid a breadboard connection between the processor and the display, and a 10 kΩ trim potentiometer is used to control the brightness of the display unit. To provide audible speech, a pre-embedded regional-language voice is assigned to each condition, in the same way as the text database, which is mapped to the impedance values. Two speakers are used, with a single 3.5 mm jack for connection and a USB cable to power the speakers [9]. When the text is displayed, the processor looks up the corresponding voice signal, which is played through the speakers.
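How the readings are compared with the predefined dataset is not spelled out. Since the abbreviation list mentions the Sum of Absolute Difference (SAD), one plausible reading is nearest-template matching by SAD, and the sketch below assumes exactly that, with a hypothetical rejection threshold (`max_sad`) so that an unknown hand shape produces no output. The template values and gesture names are invented for illustration and are not the paper's database; the sketch is in Python for readability and would need porting to Arduino C++ to run on the Nano.

```python
"""Nearest-template gesture matching by Sum of Absolute Difference (sketch)."""
from typing import Dict, Optional, Sequence

# Hypothetical templates: five flex ADC counts (F1..F5), then roll and pitch in degrees.
TEMPLATES: Dict[str, Sequence[float]] = {
    "HELLO": (150, 150, 150, 150, 60, 0, 0),
    "WATER": (260, 260, 150, 150, 110, 0, 45),
    "THANK YOU": (260, 260, 260, 260, 110, 90, 0),
}


def sad(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of absolute element-wise differences between two feature vectors."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    return sum(abs(x - y) for x, y in zip(a, b))


def match_gesture(reading: Sequence[float],
                  templates: Dict[str, Sequence[float]] = TEMPLATES,
                  max_sad: float = 100.0) -> Optional[str]:
    """Return the closest template's label, or None if even the best match is too far."""
    best_label: Optional[str] = None
    best_score = float("inf")
    for label, template in templates.items():
        score = sad(reading, template)
        if score < best_score:
            best_label, best_score = label, score
    return best_label if best_score <= max_sad else None
```

For example, `match_gesture((155, 148, 152, 149, 63, 2, -1))` returns `"HELLO"` (SAD of 16). Flex counts and angles have different scales, so a real implementation would probably weight or normalize the two groups before summing.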

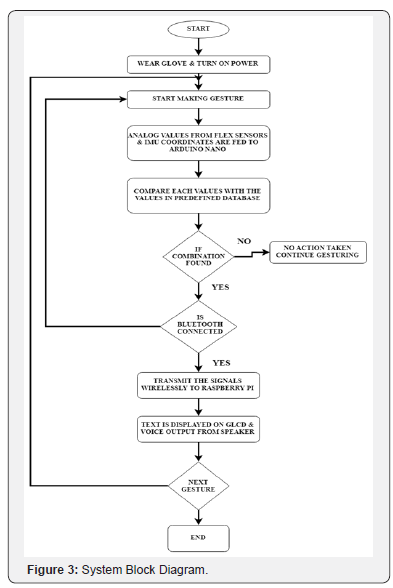

Methodology (Figure 3)

a) The gesture serves as the input to the system and is measured by both sensors: the flex sensors report it in terms of impedance, and the IMU gives digital values.

b) The values from the flex sensors are analog and are given to the Arduino Nano, whose built-in analog-to-digital converter converts the resistance-dependent voltages to digital values.

c) The IMU uses its accelerometer and gyroscope sensors to measure the displacement and position of the hand.

d) The values from both sensors are fed to the Arduino Nano, which compares them with the values stored in the predefined database and then transmits this digital data wirelessly to the main processor via Bluetooth.

e) The central processor, the Raspberry Pi 3, is programmed in Python to process the received digital signals and generate the text output, for example characters, numbers and pictures. The text output is shown on the Graphic LCD display, and a text-to-speech engine, here eSpeak, provides the audible voice output [10].

Finally, the system delivers the output as text and as audible speech in the regional language.
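Step (e) can be sketched on the Raspberry Pi side as a lookup from a recognized gesture label to its English display text and its regional-language utterance, followed by a call to the eSpeak command-line program (`-v` selects the voice). The label table and the Kannada strings are hypothetical placeholders, the `kn` voice name assumes an eSpeak/eSpeak NG build that includes a Kannada voice, and the GLCD update is a stub because the display driver is not described.

```python
"""Raspberry Pi side: gesture label -> GLCD text + eSpeak speech (sketch)."""
import shutil
import subprocess
from typing import Dict, List, Optional, Tuple

# Hypothetical table: label -> (English text for the GLCD, Kannada text to speak).
PHRASES: Dict[str, Tuple[str, str]] = {
    "HELLO": ("Hello", "ನಮಸ್ಕಾರ"),
    "WATER": ("I need water", "ನೀರು"),
    "THANK YOU": ("Thank you", "ಧನ್ಯವಾದ"),
}


def espeak_command(text: str, voice: str = "kn") -> List[str]:
    """Build the argument list for the espeak CLI; -v selects the voice."""
    return ["espeak", "-v", voice, text]


def show_on_glcd(text: str) -> None:
    """Stub for the GLCD driver (hypothetical); here the text is just printed."""
    print(f"[GLCD] {text}")


def handle_gesture(label: str, speak: bool = True) -> Optional[Tuple[str, List[str]]]:
    """Display and speak the phrase for a recognized label; ignore unknown labels."""
    entry = PHRASES.get(label)
    if entry is None:
        return None
    display_text, spoken_text = entry
    show_on_glcd(display_text)
    cmd = espeak_command(spoken_text)
    # Only invoke espeak if it is installed, so the sketch also runs without it.
    if speak and shutil.which("espeak"):
        subprocess.run(cmd, check=False)
    return display_text, cmd
```

Keeping the display text and the spoken text as separate columns mirrors the design described above, where the GLCD shows English while the speakers use the regional language; changing the output language then only means editing the second column and the voice name.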

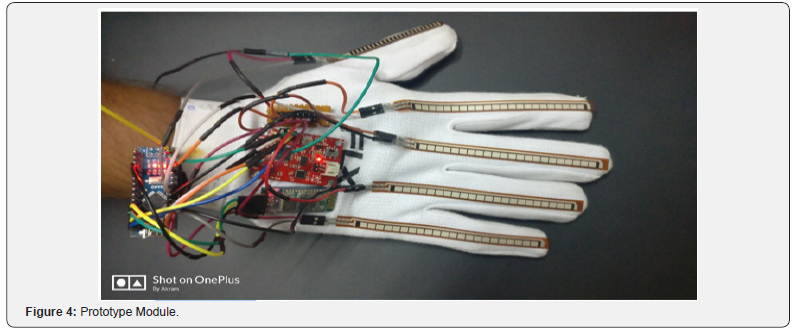

Prototype Implementation and its Working

In this system, features are extracted from the hardware sensor inputs, and the targets are the words in sign language. To meet the design requirements, several hardware components were considered during prototyping. Most of the hardware was selected for its ease of use and accuracy. The Ardu 9DOF IMU was chosen for convenience, as it performs the computation needed for linear acceleration and gyroscopic measurements. The roll, pitch and yaw measurements were found to be accurate to roughly ±2°, which is more than sufficient for our sign language requirements. Since the flex sensors required additional analog pins, careful planning allowed us to fit the circuit comfortably on the glove.

Space was found to add the HC-05 Bluetooth module to the device. All the sensors must be placed so that they do not touch each other, since contact would cause short circuits and disrupt the measurement readings [11]. Electrical tape was used to insulate the sensors. The system recognizes gestures made by the hand of a user wearing the glove, to which two types of sensor are attached. The first is the set of flex sensors that sense the bending of the five fingers: a 2.2-inch flex sensor for the thumb and 4.5-inch sensors for the other four fingers. The second is a 9-DOF inertial measurement unit that tracks the motion of the hand in three-dimensional space, which allows its movement in any direction to be followed using the IMU's angular coordinates (pitch, roll and yaw).

Since the output of the flex sensor is resistive, the values are converted to voltages using a voltage divider circuit.
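The text relies on the IMU board itself to produce roll, pitch and yaw. To show where such angles come from, the sketch below uses the standard static-tilt formulas to derive roll and pitch from raw accelerometer readings. It assumes the hand is roughly still, so that the accelerometer measures only gravity, and it uses the common aerospace convention (roll about X, pitch about Y), which may not match the axis labels used in the text; yaw cannot be recovered from gravity alone and needs the gyroscope and magnetometer.

```python
"""Static roll/pitch from a 3-axis accelerometer (sketch, hand assumed still)."""
import math
from typing import Tuple


def tilt_from_accel(ax: float, ay: float, az: float) -> Tuple[float, float]:
    """Return (roll, pitch) in degrees from accelerations in any consistent unit.

    roll  = atan2(ay, az)                  rotation about the X axis
    pitch = atan2(-ax, sqrt(ay^2 + az^2))  rotation about the Y axis
    """
    roll = math.atan2(ay, az)
    pitch = math.atan2(-ax, math.hypot(ay, az))
    return math.degrees(roll), math.degrees(pitch)
```

A hand lying flat, with gravity entirely on the Z axis, gives `tilt_from_accel(0.0, 0.0, 1.0)` equal to zero roll and zero pitch.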

The resistance of the 4.5-inch flex sensors ranges from 7 kΩ to 15 kΩ, and that of the 2.2-inch flex sensor from 20 kΩ to 40 kΩ; the tighter the bend radius, the higher the resistance. A 2.5 kΩ resistor is added to build a voltage divider circuit with a 3.3 V supply taken from the Arduino Nano, and the analog voltages from the divider are fed to the Arduino Nano, which has a built-in ADC [12]. The IMU senses the hand movements and gives digital values in the X, Y and Z directions, called roll, yaw and pitch respectively. The values from the IMU and the flex sensors are processed in the Arduino Nano, which is interfaced with an HC-05 Bluetooth module embedded on the glove that provides a range of approximately 10 meters.

The data processed in the Nano are sent wirelessly via Bluetooth to the central processor, i.e. the Raspberry Pi, which is programmed in Python to convert the received values into a text signal by searching the database for that particular gesture.
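The format of the data sent from the Nano to the Raspberry Pi is not given. As a hypothetical example, the sketch below packs one reading (five flex ADC counts and three IMU angles) into a newline-terminated ASCII line, which the HC-05 can carry as ordinary serial data, and parses it back with basic validation. The field order, the `$` start marker and the `GloveFrame` type are all assumptions made for illustration.

```python
"""Hypothetical ASCII frame for one glove reading sent over the HC-05 serial link."""
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class GloveFrame:
    flex: Tuple[int, ...]     # ADC counts F1..F5
    angles: Tuple[float, ...]  # roll, pitch, yaw in degrees


def encode(frame: GloveFrame) -> bytes:
    """Serialize as '$f1,f2,f3,f4,f5,roll,pitch,yaw' followed by a newline."""
    fields = [str(v) for v in frame.flex] + [f"{a:.1f}" for a in frame.angles]
    return ("$" + ",".join(fields) + "\n").encode("ascii")


def decode(line: bytes) -> GloveFrame:
    """Parse one received line; raise ValueError on malformed input."""
    text = line.decode("ascii").strip()  # UnicodeDecodeError is a ValueError
    if not text.startswith("$"):
        raise ValueError("missing start marker")
    parts = text[1:].split(",")
    if len(parts) != 8:
        raise ValueError(f"expected 8 fields, got {len(parts)}")
    flex = tuple(int(p) for p in parts[:5])
    angles = tuple(float(p) for p in parts[5:])
    return GloveFrame(flex=flex, angles=angles)
```

A start marker plus a fixed field count lets the receiver discard a partial line, for example one caught mid-transmission when the Bluetooth link reconnects.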

Based on the received digital values, the impedance values together with the 3D IMU coordinates for each individual gesture are recorded to populate the database, which contains the combined resistance values assigned to different finger movements. When the processor receives the computed data, it compares them with the measured dataset to detect the precise gesture. If the values match, the processor sends the designated SPI commands to display the text for that gesture on the GLCD, and eSpeak provides text-to-speech, giving an audible voice output in the regional language through the speakers. For each subsequent gesture, the flex sensors and IMU detect it again, the data are compared with the database stored in the processor, and if a match is found the text is displayed and the corresponding speech is played through the speakers [13] (Figure 4).
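Because the same gesture is re-detected on every reading while the hand is held still, some logic is needed so that each sign is spoken once and the next gesture is picked up afterwards. This step is not described in the text; the sketch below is one assumed approach. It emits a label only after the label has been matched continuously for a hold time (1.0 s here, the lower end of the 1 to 1.5 s hold reported in the Results) and does not repeat it until a different label, or no match, is seen.

```python
"""Emit each held gesture once, after it has been stable for a hold time (sketch)."""
from typing import Iterable, Iterator, Optional, Tuple


def stable_gestures(samples: Iterable[Tuple[float, Optional[str]]],
                    hold_s: float = 1.0) -> Iterator[str]:
    """samples: (timestamp in seconds, matched label or None), in time order."""
    current: Optional[str] = None  # label currently being held
    since = 0.0                    # time at which the current label was first seen
    emitted = False                # whether the current hold was already reported
    for t, label in samples:
        if label != current:
            # A new label (or loss of match) restarts the hold timer.
            current, since, emitted = label, t, False
        elif label is not None and not emitted and t - since >= hold_s:
            emitted = True
            yield label
```

A gesture held for only half a second is ignored, while a gesture held for several seconds is reported exactly once.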

Results

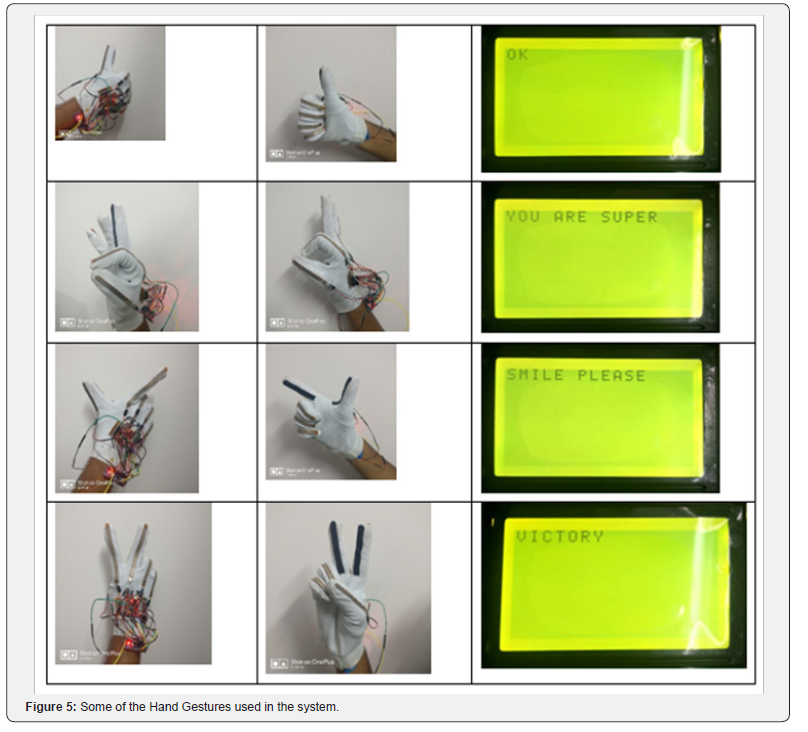

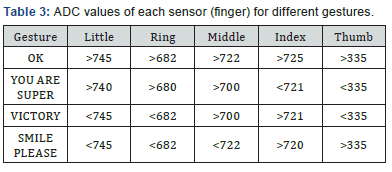

In this prototype system, the user forms a gesture and holds it for approximately 1 to 1.5 seconds to ensure proper recognition. Each gesture consists of bending all the fingers to particular angles. Every bend of a sensor (finger) produces a unique ADC value, so different hand gestures produce different ADC values. Using the ADC values of 4 different users, a table of average ADC values for each sensor is maintained, where F1, F2, F3, F4 and F5 represent the little finger, ring finger, middle finger, index finger and thumb respectively. Table 3 shows the gestures and the corresponding words voiced out. The hand signs used in the prototype can easily be modified by adjusting the ADC counts to suit the user. Likewise, the voice output can easily be changed, giving the flexibility to switch languages for different regions (Figure 5).
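The per-sensor averaging over four users can be sketched as follows. Only the F1 to F5 labelling (little finger to thumb) comes from the text; the readings in the example are invented for illustration. Each user contributes one five-value reading for a gesture, and the result is the per-finger mean, which would then serve as that gesture's entry in the ADC table.

```python
"""Average per-finger ADC readings from several users into one template (sketch)."""
from statistics import mean
from typing import Dict, Sequence

FINGERS = ("F1", "F2", "F3", "F4", "F5")  # little, ring, middle, index, thumb


def average_template(readings: Sequence[Sequence[int]]) -> Dict[str, float]:
    """Per-finger mean of the readings (one row of five ADC counts per user)."""
    if not readings:
        raise ValueError("need at least one user's reading")
    if any(len(r) != len(FINGERS) for r in readings):
        raise ValueError("each reading must have one value per finger")
    # zip(*readings) turns the per-user rows into per-finger columns.
    return {f: mean(col) for f, col in zip(FINGERS, zip(*readings))}


# Invented readings for one gesture from four users.
users = [
    [150, 152, 149, 151, 60],
    [148, 150, 151, 153, 62],
    [152, 149, 150, 150, 58],
    [150, 149, 150, 146, 60],
]
template = average_template(users)
```

Here every finger averages to 150 except the thumb (F5), which averages to 60.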

Applications

a) Voice interpreter for mute people

b) Touch-free user interface

c) Gaming industry: Hand gestures play a vital role in the gaming industry, especially in first-person shooter games. The player can control the game character with his hand, which could give a real-life experience of the game. Virtual reality is also gaining ground in the gaming industry, and combining it with gloves that provide haptic feedback can give the gamer a realistic gaming experience.

d) Controlling a robotic arm using the gloves: The gloves could be used to control a robotic arm, which has a wide range of applications. With the addition of haptic feedback, the glove and robotic arm interface could also be used in bomb defusal.

e) Remote medical surgery: The surgeon need not be physically present to perform the surgery. He could control a robotic arm remotely, and haptic feedback could give him the feel of actually performing the surgery. However, this would require very precise gesture recognition, and the transfer of data from the hand to the robotic arm would have to be free of even the smallest glitch.

Advantages

a) Cost-effective, lightweight and portable

b) Real-time translation with almost no delay

c) Flexible for 'N' users, with easy operation

d) Fully automated system for communication by mute people

Future scope

a) The system can be further developed with a Wi-Fi connection and an enlarged database supporting special characters or symbols.

b) The Microsoft Text To Speech (TTS) engine could be used to support multiple international languages.

c) An Android application can be developed for

displaying the text and speech output on an Android device.

Conclusion

Deaf-mute individuals use sign language to interact with others, but many people do not understand this gesture language. We have developed a sensor-based gesture recognition system that converts signs into speech in a regional language and into text output. In this system, deaf-mute users wear the glove to perform hand gestures, and the conversion to text and to speech in the regional language, together with the display, has been found to be consistent and reliable. The proposed system, with a database of 20 words and 15 sentences, has been successfully built; it converts gestures into English words or sentences, displays the output on the GLCD, and produces the corresponding voice output in regional languages using eSpeak. The glove carries minimal hardware, which makes it reliable, portable and cost-effective, and makes it easier for users to communicate with the general public [14]. Another application in which this system could be used in the future is as a helping hand for individuals with cerebral palsy. Cerebral palsy usually appears in early childhood and involves a group of permanent movement disorders. The symptoms vary from person to person and often include poor coordination, stiff muscles, weak muscles and tremors. Problems with sensation, vision, hearing, swallowing and speaking have also been identified as symptoms [15,16]. This problem can be addressed to a great extent by giving them a way to communicate with just a single finger. Words frequently used by such people could be conveyed with just a small movement of the finger, and our state estimation technique could predict the letters or words [17].


For More Articles Please Visit: Robotics & Automation Engineering Journal
